Rules:

The main purpose of the harvesting module is to gather data from external data sources, such as NZOR providers, and to synchronise that data with the provider data held within NZOR.

Overview of Harvesting Module

Main Functions:

Scheduling of harvesting from OAI providers

Validation of data prior to import

Linking of provider data with system lookup tables

Persistence of data to the provider (prov) tables in the NZOR_Data database

Data for import must be represented using the NZOR Provider XML schema.
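The main functions listed above can be read as a pipeline. The following is a minimal Python sketch of the validation, linking and persistence steps (scheduling is not shown). Everything in it is hypothetical: `ProviderRecord` is a simplified stand-in for a record in the NZOR Provider XML schema, `RANK_LOOKUP` stands in for a system lookup table, and plain dicts and lists stand in for the prov tables and the audit table. Persistence follows the update rule in the Rules list: an existing record with the same ProviderRecordID is overwritten and the change is logged.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical, simplified stand-in for one record parsed from NZOR Provider XML.
@dataclass
class ProviderRecord:
    provider_record_id: str
    full_name: str
    rank: str
    rank_id: Optional[int] = None  # set by the lookup-linking step


# Hypothetical system lookup table: rank name -> lookup ID.
RANK_LOOKUP = {"genus": 1, "species": 2}


def validate(record: ProviderRecord) -> list:
    """Return validation errors; an empty list means the record can be imported."""
    errors = []
    if not record.provider_record_id:
        errors.append("missing ProviderRecordID")
    if not record.full_name.strip():
        errors.append("missing name")
    if record.rank.lower() not in RANK_LOOKUP:
        errors.append(f"unknown rank {record.rank!r}")
    return errors


def link_lookups(record: ProviderRecord) -> None:
    """Resolve provider values against the system lookup tables."""
    record.rank_id = RANK_LOOKUP[record.rank.lower()]


def persist(record: ProviderRecord, prov_table: dict, audit_log: list) -> None:
    """Insert the record, or overwrite an existing one with the same
    ProviderRecordID, appending (id, old, new) to the audit log on change."""
    old = prov_table.get(record.provider_record_id)
    if old is not None and old != record:
        audit_log.append((record.provider_record_id, old, record))
    prov_table[record.provider_record_id] = record


def import_records(records, prov_table: dict, audit_log: list) -> list:
    """Validate, link and persist each record; return (record, errors) for rejects."""
    rejected = []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
            continue
        link_lookups(record)
        persist(record, prov_table, audit_log)
    return rejected


# Usage: the second import of P1 overwrites the first and is audited;
# P2 is rejected by validation.
table, audit = {}, []
import_records([ProviderRecord("P1", "Aus bus", "species")], table, audit)
rejects = import_records(
    [ProviderRecord("P1", "Aus bus L.", "species"), ProviderRecord("P2", "", "species")],
    table, audit)
```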

Rules:

When importing a provider record that already exists, the original provider record is updated (overwritten) with the new information

Updates should be logged to an audit table

If an update alters a Concept, the following needs to be considered:

Will this result in a concept being removed from a Consensus name? If so, does this leave any hanging Names without a concept defining their parent? That is, are there any names that are children of the name the concept was removed for and that do not themselves have a provider concept defining the parent linkage? If so, on a refresh such a name may be left with no parent.

Was the concept provided as a NameBasedConcept (i.e. with no ProviderRecordIDs for the Concepts and ConceptRelationships)? If so, the Concept and ConceptRelationship for the ConceptRelationshipType being updated will need to be removed and replaced with the new information. Because this involves a removal, the consideration above also applies.

If an update removes a consensus record, then checks need to be done to make sure there are no other consensus records relying on the removed consensus record.
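The two checks above can be sketched as simple queries over an in-memory view of the consensus data. This minimal Python sketch assumes hypothetical inputs (`parent_of`, `has_own_parent_concept`, `refers_to`) rather than the actual NZOR_Data tables; it only identifies the affected names and records, leaving the decision about what to do with them to the import logic.

```python
def hanging_names(removed_for: str, parent_of: dict,
                  has_own_parent_concept: set) -> list:
    """Children of the consensus name `removed_for` that have no provider concept of
    their own defining the parent linkage, so may lose their parent on refresh.

    parent_of: hypothetical map, consensus name ID -> consensus parent name ID.
    has_own_parent_concept: hypothetical set of name IDs that have at least one
    provider concept defining their parent.
    """
    children = (child for child, parent in parent_of.items() if parent == removed_for)
    return sorted(c for c in children if c not in has_own_parent_concept)


def dependent_records(removed_id: str, refers_to: dict) -> list:
    """Consensus records (other than the removed one) that still refer to `removed_id`.

    refers_to: hypothetical map, consensus record ID -> set of record IDs it relies on.
    """
    return sorted(rec for rec, targets in refers_to.items()
                  if rec != removed_id and removed_id in targets)


# Usage: N1 and N3 are children of N2, but only N1 has its own parent concept.
print(hanging_names("N2", {"N1": "N2", "N3": "N2", "N5": "N4"}, {"N1"}))  # ['N3']
print(dependent_records("N2", {"N1": {"N2"}, "N3": {"N4"}}))              # ['N1']
```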

Business Logic Diagrams:

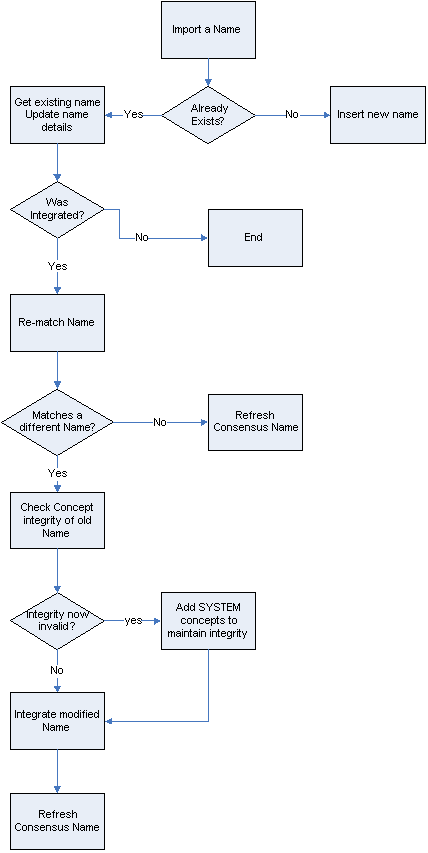

Import Name:

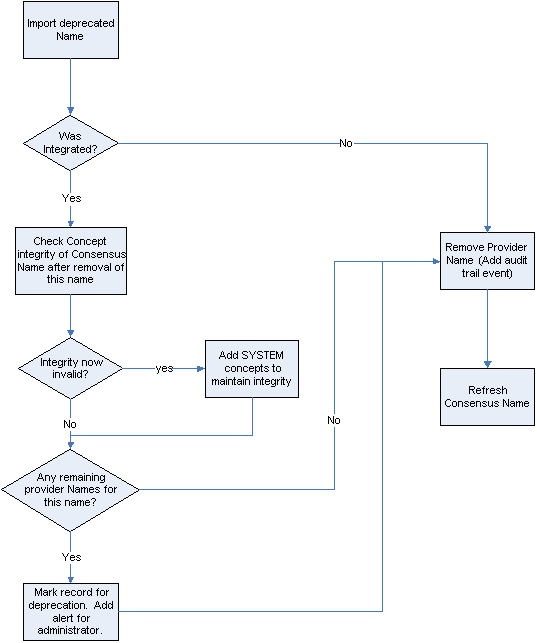

Deprecated Name:

There are two ways that Concepts can be provided by a provider: either as full TaxonConcepts or as NameBasedConcepts.

These two types of concept roughly map to taxonomic concepts and nomenclatural concepts respectively. Nomenclatural concepts are the name relationships defined by the circumscription of the name under the nomenclatural codes; taxonomic concepts are subjective relationships. For this reason there can be two "types" of Parent Name: one nomenclaturally defined, the other a subjective placement of the name in the taxonomic hierarchy.

The full taxonomic concept model is as follows:

(Diagram: Taxon Name → Taxon Concept → Concept Relationship (with its Relationship Type) → Taxon Concept → Taxon Name)

Example:

Name, ID = N1, Aus bus

Concept, ID = C1, for N1 according to A1

Concept Relationship, ID = CR1, C1 has parent C2

Concept, ID = C2, for N2 according to A1

Name, ID = N2, Aus

Reference, ID = A1, "Flora of New Zealand …"

All parts are required, and each has an ID that the provider must maintain.
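To make the chain concrete, the example above can be expressed as plain Python data. This is a minimal sketch; the class and field names (`Name`, `Concept`, `ConceptRelationship`, `parent_names`) and the `"has parent"` label are hypothetical and do not reflect the actual NZOR_Data table definitions. It shows how a parent name is found by following Name → Concept → ConceptRelationship → Concept → Name for one reference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Name:
    id: str
    full_name: str


@dataclass(frozen=True)
class Concept:
    id: str
    name_id: str
    according_to_id: str  # ID of the Reference the concept is "according to"


@dataclass(frozen=True)
class ConceptRelationship:
    id: str
    from_concept_id: str
    to_concept_id: str
    relationship_type: str  # hypothetical type label, e.g. "has parent"


# The example above, with provider-maintained IDs.
names = {"N1": Name("N1", "Aus bus"), "N2": Name("N2", "Aus")}
concepts = {"C1": Concept("C1", "N1", "A1"), "C2": Concept("C2", "N2", "A1")}
relationships = [ConceptRelationship("CR1", "C1", "C2", "has parent")]


def parent_names(name_id: str, according_to_id: str) -> list:
    """Follow Name -> Concept -> ConceptRelationship -> Concept -> Name
    for the concepts of `name_id` according to `according_to_id`."""
    from_ids = {c.id for c in concepts.values()
                if c.name_id == name_id and c.according_to_id == according_to_id}
    return [names[concepts[r.to_concept_id].name_id]
            for r in relationships
            if r.relationship_type == "has parent" and r.from_concept_id in from_ids]


print([n.full_name for n in parent_names("N1", "A1")])  # ['Aus']
```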

The NameBasedConcept (NBC) has the following structure:

(Diagram: a Name Based Concept links a Taxon Name to its (Accepted) Taxon Name and (Parent) Taxon Name)

Example:

Name, ID = N1, Aus bus

NBC, N1 has parent N2 according to A2

Name, ID = N2, Aus

Reference, ID = A2, "Flora of New Zealand …"

Only the Name IDs (and the Reference) are maintained.

The problem arises when importing these name based concepts into the NZOR data, which has the structure of the first example. We must either map the NameBasedConcepts into the N-C-CR-C-N (Name-Concept-ConceptRelationship-Concept-Name) structure, generating temporary IDs, or create additional tables to handle NameBasedConcepts. The former option has been adopted.

On Import of Name Based Concepts:

For the Name that the NameBasedConcept is provided for, remove all Concepts and ConceptRelationships of the same type (i.e. if a ParentName is provided, remove the existing concept/relationship of that type; if a preferred name is provided, remove that; and so on). The AccordingTo reference is ignored in this case because a given Name can really only have one name based concept.

Re-insert the Concepts and ConceptRelationship for the NameBasedConcept that is provided.

The ConceptRelationship.ToConceptId should point to another Concept with the same Name and AccordingTo reference (this may have been provided via a NameBasedConcept or a full TaxonConcept; it shouldn't matter which).

Flag that these concepts were generated from a Name Based Concept.
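The import steps above can be sketched as follows. This is a minimal Python sketch, not the NZOR implementation: the classes, the `ParentName`/`PreferredName` type labels and the `TMP-` temporary-ID format are hypothetical. Two points are interpretations: the target Concept is taken to be a Concept for the related Name (the parent, preferred name, etc.) with the same AccordingTo, and generated Concepts for the Name that are left unreferenced after the removal step are dropped.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class NameBasedConcept:
    name_id: str
    relationship_type: str  # hypothetical labels, e.g. "ParentName", "PreferredName"
    related_name_id: str
    according_to_id: str


@dataclass
class Concept:
    id: str
    name_id: str
    according_to_id: str
    from_nbc: bool = False  # flag: generated from a Name Based Concept


@dataclass
class ConceptRelationship:
    id: str
    from_concept_id: str
    to_concept_id: str
    relationship_type: str
    from_nbc: bool = False


_counter = itertools.count(1)


def temp_id(prefix: str) -> str:
    """Hypothetical generator for temporary IDs (NBCs carry no Concept IDs)."""
    return f"TMP-{prefix}{next(_counter)}"


def get_or_create_concept(concepts: list, name_id: str, according_to_id: str) -> Concept:
    """Reuse a Concept with this Name and AccordingTo, whether it came from an NBC
    or a full TaxonConcept; otherwise generate a flagged one."""
    for c in concepts:
        if c.name_id == name_id and c.according_to_id == according_to_id:
            return c
    c = Concept(temp_id("C"), name_id, according_to_id, from_nbc=True)
    concepts.append(c)
    return c


def import_nbc(nbc: NameBasedConcept, concepts: list, relationships: list):
    """Apply one NameBasedConcept; `concepts` and `relationships` are changed in place."""
    # Remove this Name's relationships of the same type, ignoring AccordingTo.
    own_ids = {c.id for c in concepts if c.name_id == nbc.name_id}
    relationships[:] = [r for r in relationships
                        if not (r.from_concept_id in own_ids
                                and r.relationship_type == nbc.relationship_type)]
    # Assumption: drop generated Concepts for this Name that are now unreferenced.
    used = {r.from_concept_id for r in relationships} | {r.to_concept_id for r in relationships}
    concepts[:] = [c for c in concepts
                   if not (c.name_id == nbc.name_id and c.from_nbc and c.id not in used)]
    # Re-insert flagged Concepts and the ConceptRelationship; the target is a
    # Concept for the related Name with the same AccordingTo (interpretation).
    from_c = get_or_create_concept(concepts, nbc.name_id, nbc.according_to_id)
    to_c = get_or_create_concept(concepts, nbc.related_name_id, nbc.according_to_id)
    rel = ConceptRelationship(temp_id("CR"), from_c.id, to_c.id,
                              nbc.relationship_type, from_nbc=True)
    relationships.append(rel)
    return rel


# Usage: re-importing N1 with a different parent replaces the old ParentName link.
concepts, rels = [], []
import_nbc(NameBasedConcept("N1", "ParentName", "N2", "A2"), concepts, rels)
rel = import_nbc(NameBasedConcept("N1", "ParentName", "N3", "A2"), concepts, rels)
```

Because the target Concept is looked up by Name and AccordingTo, a Concept supplied earlier as a full TaxonConcept is reused rather than duplicated.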

Use Case:

MAF submits a list of names in BRAD. 60% are matched by NZOR records. The other 40% are either garbage or good names not in NZOR. NZOR queries global sources (GNI, CoL, CoL Regional Hub, GNUB (IF/ZooBank)) for hits. Returns are stored in the global cache, and GUIDs are minted/propagated. New matching records are returned to the consumer. Implied order of preference of providers (stop at the first source that returns a result): CoL, GNUB, GNI. What to do with multiple hits? The assumed consumer workflow is: 1) submit the list of non-matches, 2) examine the returns to identify those required, 3) resubmit with the 'cache' flag switched on.
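The "stop at the first source that returns a result" preference can be sketched as below. This is a minimal Python sketch under stated assumptions: each source is a hypothetical callable standing in for a real client (CoL, GNUB and GNI APIs are not modelled), and minting a GUID with `uuid.uuid4()` when the 'cache' flag is on is an illustrative choice, not a confirmed NZOR scheme.

```python
import uuid
from typing import Callable, List, Optional, Tuple

# A source is a hypothetical client callable: name -> list of hit dicts.
Source = Callable[[str], list]


def resolve_globally(name: str, sources: List[Tuple[str, Source]],
                     cache: Optional[dict] = None):
    """Query (label, source) pairs in preference order; stop at the first with hits.

    Returns (label, hits), or (None, []) if no source matches. When `cache` is given
    (the 'cache' flag is on), each hit is stored under a newly minted GUID.
    """
    for label, query in sources:
        hits = query(name)
        if hits:
            if cache is not None:
                for hit in hits:
                    cache[str(uuid.uuid4())] = {"source": label, **hit}
            return label, hits
    return None, []


# Usage with stub sources: CoL has no hit, so GNUB answers and GNI is never asked.
sources = [
    ("CoL", lambda n: []),
    ("GNUB", lambda n: [{"name": n, "id": "g1"}]),
    ("GNI", lambda n: [{"name": n, "id": "x1"}]),
]
cache = {}
label, hits = resolve_globally("Aus bus", sources, cache)
```

Multiple hits from the first responding source are returned together, leaving the "what to do with multiple hits?" question to the consumer workflow.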

Q. Two processes? First, match against NZOR, which returns 0 hits, 1 hit or multiple hits. A consumer workflow is required for 0 and multiple hits. The 0-hit names (excluding garbage removed by the consumer workflow) are resubmitted as a query to the global service interface.
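The first process can be sketched as a partition of the submitted names by NZOR match count. This minimal Python sketch assumes a hypothetical `nzor_index` (normalised name to NZOR record IDs) and a simple normalisation (collapse whitespace, ignore case); real name matching would be more involved. The consumer's garbage removal is represented by a plain set.

```python
def normalise(name: str) -> str:
    """Hypothetical normalisation: collapse whitespace and ignore case."""
    return " ".join(name.split()).lower()


def partition_by_hits(submitted, nzor_index: dict) -> dict:
    """Group submitted names by NZOR match count: 'none', 'single' or 'multiple'.

    nzor_index: hypothetical map, normalised name -> list of NZOR record IDs.
    """
    groups = {"none": [], "single": [], "multiple": []}
    for name in submitted:
        hits = nzor_index.get(normalise(name), [])
        key = "none" if not hits else "single" if len(hits) == 1 else "multiple"
        groups[key].append((name, hits))
    return groups


# Usage: only the 0-hit names, minus those the consumer flags as garbage,
# go on to the global service interface.
index = {"aus bus": ["NZOR-1"], "aus": ["NZOR-2", "NZOR-3"]}
groups = partition_by_hits(["Aus  bus", "Aus", "Cus dus", "asdf"], index)
garbage = {"asdf"}
to_global = [name for name, _ in groups["none"] if name not in garbage]
```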

The service response provides a link to the result set. The consumer polls the endpoint and gets either 'not ready'/'don't know' or the results as CSV/structured data.
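On the consumer side, the polling described above might look like the following minimal Python sketch. The `fetch` callable is a hypothetical client function (returning `None` for 'not ready'/'don't know' and the payload otherwise), the example URL is a placeholder, and the polling interval and attempt limit are arbitrary defaults rather than values from the service design.

```python
import time
from typing import Callable, Optional


def poll_results(fetch: Callable[[str], Optional[str]], result_url: str,
                 interval_s: float = 5.0, max_attempts: int = 60) -> str:
    """Poll the result-set link returned by the service until data is available.

    `fetch` is a hypothetical client call: it returns None while the service answers
    'not ready'/'don't know', and the CSV/structured payload once results exist.
    """
    for attempt in range(max_attempts):
        payload = fetch(result_url)
        if payload is not None:
            return payload
        if attempt < max_attempts - 1:
            time.sleep(interval_s)  # wait before the next poll
    raise TimeoutError(f"no results at {result_url} after {max_attempts} attempts")


# Usage with a stub that is 'not ready' twice before returning CSV.
responses = iter([None, None, "name,id\nAus bus,N1\n"])
payload = poll_results(lambda url: next(responses), "https://example.org/results/123",
                       interval_s=0)
```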

Distribution: for both existing NZ names and global cache names, query global sources for distribution data (TDWG L4): GBIF for records in the network (keep just the list of countries), and CoL data (10% of CoL has distribution data). Return the data and integrate it into the NZOR record.
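Integrating the returned distribution data could be as simple as a de-duplicating merge that remembers which sources reported each area. This minimal Python sketch assumes a hypothetical record layout (a `"distribution"` dict of area code to sources) and assumes all sources already use one area-code scheme; in practice GBIF country lists and TDWG L4 areas would first need mapping to a common scheme.

```python
def integrate_distribution(record: dict, source_areas: dict) -> dict:
    """Return a copy of `record` whose 'distribution' maps each area code to the
    sorted list of sources reporting it, merging in `source_areas`
    (source label -> list of area codes) without duplicates."""
    merged = {area: set(srcs) for area, srcs in record.get("distribution", {}).items()}
    for source, areas in source_areas.items():
        for area in areas:
            merged.setdefault(area, set()).add(source)
    return {**record, "distribution": {a: sorted(s) for a, s in sorted(merged.items())}}


# Usage: GBIF contributes country codes, CoL a single area; "NZ" is reported by both.
record = {"id": "NZOR-1", "name": "Aus bus"}
updated = integrate_distribution(record, {"GBIF": ["NZ", "AU"], "CoL": ["NZ"]})
print(updated["distribution"])  # {'AU': ['GBIF'], 'NZ': ['CoL', 'GBIF']}
```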

In the web interface batch match, there is a choice: NZOR or Global Resolution Service? In the latter case the results are not kept in the global cache; the consumer needs to specifically request that returns be brokered within NZOR and cached.

Need to consider whether login/security is needed for this (also so that a consumer token can be used to track requests/usage).

In both cases, treat the Global Cache as a set of provider records (CoL as a provider, GNI as a provider, etc.). Cached records are to be linked into the CoL management hierarchy if possible (if the source provides a higher classification); if not, the provider record 'floats'.
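The linking rule above can be sketched as follows. This minimal Python sketch assumes a hypothetical `CachedRecord` shape and a `hierarchy` map from name to management-hierarchy node ID; attaching the record under the nearest ancestor that the hierarchy already contains is an assumed policy, not one stated in these notes.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CachedRecord:
    source: str  # provider label, e.g. "CoL" or "GNI"
    name: str
    # Higher classification supplied by the source, highest rank first (may be empty).
    higher_classification: List[str] = field(default_factory=list)
    parent_node: Optional[str] = None  # hierarchy node; None means the record "floats"


def link_into_hierarchy(record: CachedRecord, hierarchy: dict) -> CachedRecord:
    """Attach `record` under the nearest ancestor name found in `hierarchy`
    (hypothetical map: name -> management-hierarchy node ID). With no usable
    higher classification, parent_node stays None and the record floats."""
    for ancestor in reversed(record.higher_classification):
        if ancestor in hierarchy:
            record.parent_node = hierarchy[ancestor]
            break
    return record


hierarchy = {"Plantae": "H1", "Aus": "H7"}
linked = link_into_hierarchy(CachedRecord("CoL", "Aus bus", ["Plantae", "Fabaceae", "Aus"]), hierarchy)
floating = link_into_hierarchy(CachedRecord("GNI", "Xus yus"), hierarchy)
print(linked.parent_node, floating.parent_node)  # H7 None
```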

| Component | Tasks |
| --- | --- |
| initial query | web service + validation? + return data structure + flags to cache or not |
| global harvest from multiple sources | maintain list of endpoints and necessary query formats, and handle returns |
| integration | put return data in global cache (in name cache), link to hierarchy (maybe), trigger harvest into integrator, generate GUIDs |
| maintenance | scheduled re-query and replace/update? |
| geodistribution | |